While I was lying in the hammock over the midsummer weekend, I let my mind wander.

Suddenly it wandered onto a path that led me to think about past integration projects and what I can learn from them.

I have been developing integrations to and from complex applications for more than a decade. I strongly agree with the idea of building integrations in vendor-independent, standard ways. So far the best approach, thanks to strong standardisation, has been to use Web Services (WS) as much as possible to consume services and to fetch data from or upload data to applications.

In the beginning we had no previous experience in designing WSs. We built services the way we thought, at that time, was suitable, but we have since learned that certain things could be done differently.

First of all, the GUI, the business logic and the WS part of the application should each be packaged into their own (J2EE) applications with their own persistence. This makes it easier to update the user experience and the business logic while the WS part stays the same and keeps running during the service break for the other parts. Especially if you are developing an in-house application in agile, tightly scheduled iterations, it can be useful not to have to stop the WS part (the connectivity services), and not to spend effort building complex retry logic into the middleware / ESB implementation just to cope with product updates. And if the WS part is built as I propose below, the actual business logic and data models can also be updated without touching the integrations or the information objects used to interact with service consumers / clients. Separated GUI, business logic and WS parts can also be scaled individually based on the observed workloads.
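A minimal sketch of the idea that the WS part publishes its own stable information objects, separate from the internal data model, might look like this. All class and field names are hypothetical, and Python dataclasses stand in for the types you would have on the J2EE side.

```python
from dataclasses import dataclass


# Hypothetical internal data model owned by the business-logic application.
# It may change freely between releases.
@dataclass
class InternalOrder:
    order_id: int
    customer_ref: str
    total_cents: int


# Hypothetical information object published by the WS part (connectivity
# service). This is the contract the middleware / ESB layer sees, so it
# should stay stable even when InternalOrder changes.
@dataclass(frozen=True)
class OrderMessage:
    order_number: str
    customer: str
    total: str  # decimal string, e.g. "12.50"


def to_message(order: InternalOrder) -> OrderMessage:
    """Map the internal model to the published contract.

    Only this mapping needs to change when the internal model changes;
    service consumers keep receiving the same OrderMessage shape.
    Assumes a non-negative amount.
    """
    euros, cents = divmod(order.total_cents, 100)
    return OrderMessage(
        order_number=str(order.order_id),
        customer=order.customer_ref,
        total=f"{euros}.{cents:02d}",
    )


msg = to_message(InternalOrder(order_id=42, customer_ref="C-7", total_cents=1250))
print(msg)
```

The mapping function is the only place that knows both shapes, which is what lets the business-logic application evolve without a coordinated change in every connected system.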

The second lesson learned is that application-specific services and the other, perhaps more general, services a project produces should not be mixed in the same application and persistence. They should be kept strictly separated, each in its own. The reasoning is the same as above.

The level of abstraction and granularity of the WSs was discussed at length in the past, but we have since learned that in some projects we chose to publish overly elementary services. For example, in one project a client needed several services / calls to upload data to the application. That was fine while there were only a few connected systems and users. When volumes increased, there was too much XML parsing, serialization and logic processing for the single J2EE application that was also serving the web interface to users at the same time. There were severe problems with application server resources (memory and CPU) and with WS response times, which caused unnecessary errors (timeouts or internal server error responses) and extra management work in the middleware / ESB layer too.
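To illustrate the granularity point, here is a minimal sketch of a coarse-grained operation that accepts a whole document (header and all lines) in one call, so the XML is parsed once per upload instead of once per elementary call. The payload structure, its element and attribute names, and the `upload_order` function are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical payload: one document carries the header and all lines.
# With elementary services the same upload would be four separate calls
# (one for the header, one per line), each parsed and serialized on its own.
PAYLOAD = """<order number="1001">
  <line sku="A-1" qty="2"/>
  <line sku="B-7" qty="1"/>
  <line sku="C-3" qty="5"/>
</order>"""


def upload_order(xml_text: str) -> dict:
    """Coarse-grained operation: parse the document once, handle all lines."""
    root = ET.fromstring(xml_text)  # a single parse for the whole upload
    lines = [
        (line.get("sku"), int(line.get("qty")))
        for line in root.findall("line")
    ]
    return {"order": root.get("number"), "lines": lines}


result = upload_order(PAYLOAD)
print(result)
```

The trade-off is a larger message per call, but one request, one parse and one response per business transaction is usually far cheaper for the application server than many small round trips.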

Near real time instead of real time!

From the middleware / ESB layer's point of view, the interface should be fast and reliable even at high volumes. So for applications that apply complex computation (such as route calculation) to the data they receive or produce, one should consider separating the real-time application data from the data that the WS layer (connectivity services) inserts or updates.

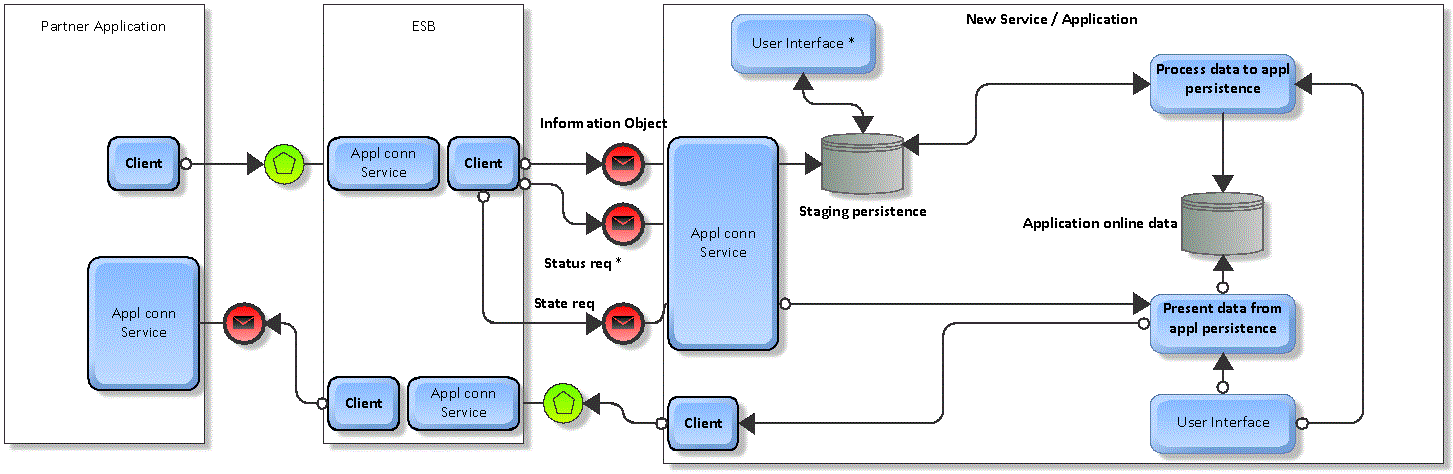

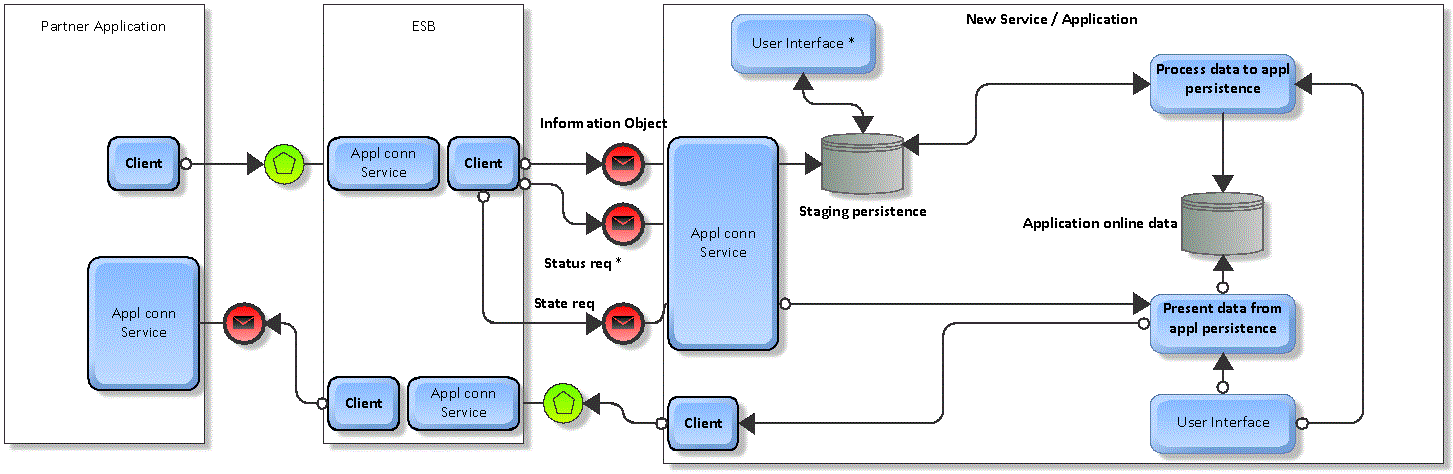

My suggestion is to consider the following model for complex applications (the idea is borrowed from SAP):

- Received data is simply stored in the persistence, where it waits for the complex processing and upload into the application. The middleware / ESB layer gets an acknowledgement back that the data was received by the WS part (connectivity service) and that the status of the processing can be tracked with ID xxxx.
- Only the syntax of the received data should be validated against the schema while interacting with the middleware / ESB layer, not the content.
- Dedicated services / operations should be provided for tracking the status of the complex data import / update into the application.
- It could also be useful to implement a GUI in which business users can manually edit and reprocess failed / incomplete data from the WS part (connectivity service) into the application.
- There should perhaps also be caches for data produced or published with complex business logic. The cached, preprocessed data could then be published with simple, lightweight logic at high throughput.
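The receive, acknowledge and track flow from the list can be sketched in memory as follows. This is a minimal sketch under several assumptions: a Python dict stands in for the staging persistence, a well-formedness check stands in for real XSD schema validation (the Python standard library has no XSD validator), and `ConnectivityInbox`, `receive`, `status`, `process_pending`, `reprocess` and `route_calculation` are hypothetical names, not an existing API.

```python
import uuid
import xml.etree.ElementTree as ET
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    RECEIVED = "received"
    PROCESSED = "processed"
    FAILED = "failed"


class ConnectivityInbox:
    """Hypothetical WS-side staging store, kept apart from application data."""

    def __init__(self, processor: Callable[[str], None]):
        self._staged = {}            # tracking id -> raw payload
        self._status = {}            # tracking id -> Status
        self._processor = processor  # the slow, complex business logic

    def receive(self, payload: str) -> str:
        """Fast path called by the middleware / ESB layer.

        Only syntax is checked (well-formedness, standing in for schema
        validation); content is not interpreted. The payload is staged and
        a tracking id is returned as the acknowledgement.
        """
        ET.fromstring(payload)  # raises ET.ParseError on malformed XML
        tracking_id = str(uuid.uuid4())
        self._staged[tracking_id] = payload
        self._status[tracking_id] = Status.RECEIVED
        return tracking_id

    def status(self, tracking_id: str) -> Status:
        """Separate operation for tracking the processing status."""
        return self._status[tracking_id]

    def process_pending(self) -> None:
        """Background job: push staged data into the application."""
        for tracking_id, status in list(self._status.items()):
            if status is Status.RECEIVED:
                self._run(tracking_id)

    def reprocess(self, tracking_id: str, corrected: Optional[str] = None) -> Status:
        """E.g. triggered from a business-user GUI after manual editing."""
        if corrected is not None:
            ET.fromstring(corrected)  # same syntax-only check as receive()
            self._staged[tracking_id] = corrected
        self._run(tracking_id)
        return self._status[tracking_id]

    def _run(self, tracking_id: str) -> None:
        try:
            self._processor(self._staged[tracking_id])
            self._status[tracking_id] = Status.PROCESSED
        except Exception:  # any processing error marks the item as failed
            self._status[tracking_id] = Status.FAILED


def route_calculation(payload: str) -> None:
    # Hypothetical stand-in for complex processing: rejects routes without stops.
    if not ET.fromstring(payload).findall("stop"):
        raise ValueError("route has no stops")


inbox = ConnectivityInbox(route_calculation)
ok_id = inbox.receive("<route><stop id='1'/></route>")
bad_id = inbox.receive("<route/>")  # well-formed, so it is accepted and staged
inbox.process_pending()
print(inbox.status(ok_id), inbox.status(bad_id))
fixed = inbox.reprocess(bad_id, "<route><stop id='2'/></route>")
print(fixed)
```

Note that the empty route is acknowledged by `receive` and only fails later in `process_pending`: the ESB gets a fast answer, and content problems surface through the status operation and the reprocessing path instead of as timeouts.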

In the pictures above I try to express an ideal architecture that helps make an application maintainable, scalable and reliable from the middleware / ESB layer's point of view.